Contents

1. Introduction

The primary purpose of the UKFE ‘R’ package is to implement the regional flood frequency methods outlined by the Flood Estimation Handbook (FEH) and associated updates. However, there are numerous other functions of use to the hydrologist. This quick guide is for the core FEH functions which lead to extreme flow estimates from single site or pooling (gauged & ungauged) and a design hydrograph using ReFH. There is no assumption that readers of this guide are experienced ‘R’ users. There is an assumption that the reader is familiar with the application of the FEH method.

For more information about the functions discussed here and further functions of the package, all the functions are detailed in a pdf via the following link:

https://cran.r-project.org/web/packages/UKFE/UKFE.pdf

Details can also be viewed in the package documentation within the ‘R’ environment. If using RStudio, you can click on packages, search for UKFE and then click on it. You will then see a list of functions; each can be clicked for more details. If you’re using base ‘R’ (it’s a bit more opaque) to get help with individual functions you can type help(FunctionName) or ?FunctionName. To get a list of the function names type ls("package:UKFE"). This latter approach also works in RStudio.

Once R is open, to get access to the package it must be installed (this only needs to be done once) and then loaded. The following commands can be entered into the console to do so. Afterwards you are ready use the package

install.packages("UKFE")library(UKFE)Table 1 provides the key functions for implementing the FEH methods:

Table 1: Key UKFE functions for implementing the FEH methods

2. Getting catchment descriptors

Catchment descriptors can be brought into the ‘R’ environment by the GetCDs function for gauged sites that are suitable for pooling or QMED. For example they can be viewed by using the GetCDs function as follows (assuming your gauged reference is 39001):

GetCDs(39001)It’s useful to store them as an ‘object’ for use with other functions. In which case you can give them a name and attribute the name to the object with ‘<-‘. For example:

CDs.39001 <- GetCDs(39001)Then when you wish to view them, the object name CDs.39001 can be entered into the console.

If you wish to derive CDs for catchments that aren’t suitable for pooling or QMED, or aren’t gauged at all, you can use the CDsXML function. The file path will need to be used and for windows operating systems, the backslashes will need to be changed to forward slashes. For example, importing some descriptors downloaded from the FEH webserver:

CDs.MySite <- CDsXML("C:/Data/FEH_Catchment_384200_458200.xml")Or if importing CDs from the NRFA peak flows download:

CDs.4003 <- CDsXML("C:/Data/NRFAPeakFlow_v11/Suitable for QMED/4003.xml")3. Quick results

Once you have some catchment descriptors you can start to use many of the FEH based functions. The full process, covered shortly, is encompassed in the QuickResults function. This provides results from a default pooling group for gauged or ungauged catchments and automatically accounts for urbanisation and provides a two donor transfer if gauged = FALSE. The default distribution is the generalised logistic distribution. First, we’ll get some CDs for a site suitable for pooling so we can do a fake ungauged estimate and a gauged estimate. Then the following code provides estimate and plots for the gauged and fake ungauged case.

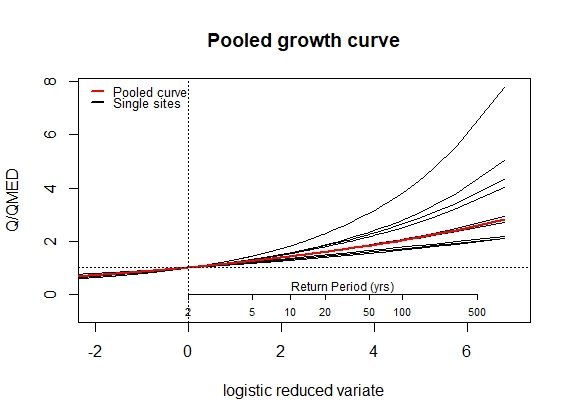

CDs.55002 <- GetCDs(55002)QuickResults(CDs.55002, gauged = TRUE)Figure 1: Default gauged growth curve for site 55002

Table 2: Results of the default ESS pooling for site 55002

The fake ungauged quick results estimate removes the pooled site of interest from the process and implements an ungauged assessment.

QuickResults(CDs.55002, FUngauged = TRUE) Figure 2: Default fake ungauged growth curve for site 55002

The default is ungauged, in which case, for a catchment with no gauge, it’s used in the same way, but excluding the second argument. If the site is not urban and the CDs are from a site suitable for pooling, the site itself will be included in this latter case (so it won’t be truly ungauged unless we use FUngauged = TRUE).

4. Estimating QMED

There is a QMED function which provides the ungauged QMED estimate and can also provide one or two donor estimates. For the first case it is used as:

QMED(CDs.55002)If the site is urbanised there will be a warning and a suggestion to change the UrbAdj argument to TRUE.

To undertake a donor transfer, to improve the estimate, a list of donor candidates is necessary. For this there is the DonAdj function, which provides the closest 10 sites (geographically) suitable for QMED, along with catchment descriptors. It also provides the donor adjusted result beside each one, in the last column.

DonAdj(CDs.55002)You can apply two donors by selecting from the list, the favoured two candidates and applying the QMED function with a vector of the site references. A vector in ‘R’ is simply a single sample of data, as opposed to a data frame or matrix. To create one, you can use c(datapoint1, datapoint2….datapointN). The following, then, provides a two-donor adjusted QMED:

QMED(CDs.55002, Don2 = c(55007, 55016))Urban donors should be avoided, but in the case that the subject catchment is rural, and the donor is urban, the ungauged QMED estimate of the donor (or donors) can be urban adjusted by setting the DonUrbAdj argument to TRUE.

5. Creating a pooling group

To create a pooling group, the Pool function can be used. If it is for a gauged site it will be included in the group automatically unless it is urban, in which case it will not. If you wish to include the urban site, the iug argument can be set to TRUE. In which case you may also wish to set the DeUrb argument to TRUE, to de-urbanise the subject site so that the estimate can undergo urban adjustment after. To create and view the pooled group:

Pool.55002 <- Pool(CDs = CDs.55002)Pool.55002You may now wish to assess the group for heterogeneity and see which distribution fits best. The H2 function can check for heterogeneity.

H2(Pool.55002)It seems that this site is heterogenous. In which case you may wish to use the DiagPlots function to check for any outliers etc. You can also compare with the discordancy measure which can be seen in the second to last column of the pooling group created.

DiagPlots(Pool.55002)Let’s pretend that site 8010 and site 76017 are to be removed. In this case, only because they have the highest discordancy. We will also pretend a more thorough analysis was undertaken. To remove the sites, we recreate the pooling group and use the exclude option. The function automatically adds the next site/s with the lowest similarity distance measure if the total number of AMAX in the group has dropped below 500 (or whatever we set as the N argument when using the Pool function, which has a default of 500).

Pool.55002 <- Pool(CDs = CDs.55002, exclude = c(8010, 76017))Using the H2 function again the heterogeneity appears to have reduced but the group is still not considered homogenous. We will leave it at that for now and check for the best distribution.

There are two function to do this and they both compare the GEV and the Generalised logistic distributions. The first is the Zdist outlined in FEH volume 3.

Zdists(Pool.55002)In this case it is the generalised logistic distribution that appears to fit best. The second is the goodness of tail fit function ‘GoTF’. This more specifically looks at the tail of the pooled data and doesn’t use the kappa distribution as the Zdist approach does. Instead, it bootstraps the standardised pooled data. (Note that this following function is not available as of UKFE version 0.3.5 but may return)

GoTFPool(Pool.55002)In this case a score for both the tail coefficient of variation and the mean are returned. Whichever score is the highest suggests the better fit. Again, in this case, the generalised logistic distribution appears to be the best fit.

The L-cv and L-Skew for each site is provided in the pooling group and is derived from the NRFA AMAX data. If the user has further information, for example, an extra year of data to provide a new annual maximum. The LCV and LSKEW can be calculated using the Lcv & LSkew functions. The user’s data can be pasted in or read in to the ‘R’ environment. To paste in use:

MyDate <- scan()Then press control v.

Then use Lcv(MyData) and LSkew(MyData) to derive the LMoment ratios.

The relevant results can be updated in the pooling group by applying the LRatioChange() function. For example if you wanted a new pooling group with updated LMoment ratios for site 55002, the following code can be applied.

UpdatedPool <- LRatioChange(Pool.55002, SiteID = 55002, lcv = 0.187, lskew = 0.164)6. Final estimates (the growth curve and results)

To derive the final estimate the PoolEst function is applied to our pooling group. The QMED option is always required for this function because the user may have further information and data than that provided by the NRFA peak flows AMAX data. We will first get a QMED estimate (in this case we will use the data available from NRFA). To get an estimate of the QMED we can either use the GetAM function and look at the data or we can get it more directly from the QMEDData embedded within the package. The following code provides the latter.

GetQMED(55002)We can use functions within functions so our use of PoolEst can contain the above command

PoolEst(Pool.55002, QMED = GetQMED(55002), gauged = TRUE)The EVPool function can be used to plot a growth curve (which should look like figure 1).

EVPool(Pool.55002, gauged = TRUE)For the ungauged catchment, the same process can be followed. First, we’ll exclude the site from our pooling group. We will assume we went through a rigorous pooling group analysis and concluded all was well:

PoolUG.55002 <- Pool(CDs.55002, exclude = 55002)Then estimate QMED from the catchment descriptors with two donors:

CDsQmed.55002 <- QMED(CDs.55002, Don2 = c(55007, 55016))Use the pooling group and the ungauged QMED estimate to provide the pooled estimates, and then plot the pooled growth curve:

Results55002 <- PoolEst(PoolUG.55002, QMED = CDsQmed.55002)and print the results and plot a frequency curve for the pooling group.

Results55002EVPool(PoolUG.55002)7. Design Hydrograph using ReFH and exporting results

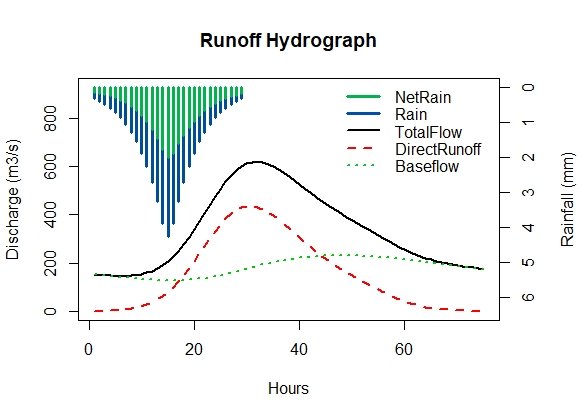

The ReFH function is designed for flexibility and each parameter of the model, along with the inputs and initial conditions can be user defined. In this example, it is being used to derive a design hydrograph. i.e. a hydrograph shape that is scaled to our design flood estimate (assuming the site to be ungauged). The default use of ReFH with the CDs provides the rainfall runoff outputs for a two-year design rainfall event:

ReFH(CDs.55002)Figure 3: Default ReFH output for catchment 55002

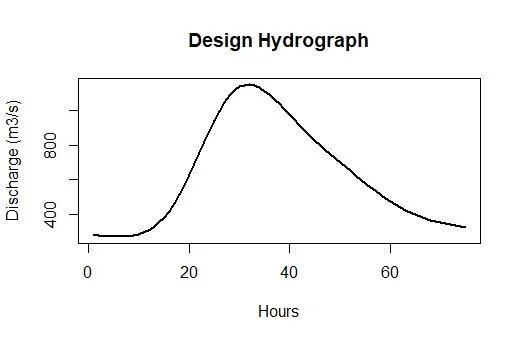

To scale it to the design flow estimate the results from the ungauged pooling can be used (assuming this is for the ungauged case). Looking at the estimate again:

Results55002The results are in the form of a list. The first element provides the return levels and the 100-year flow estimate is on the seventh row and second column. We’ll make it an object and use it to scale the hydrograph:

Q100.55002 <- Results55002[[1]][7,2]ReFH(CDs.55002, scaled = Q100.55002)Figure 4: Scaled ReFH hydrograph

For use outside of ‘R’ these outputs can be saved as objects and then written to csv files.

Results.55002 <- PoolEst(PoolUG.55002, QMED = CDsQmed.55002)[[1]]DesignHydro.55002 <- ReFH(CDs.55002, scaled = Q100.55002)write.csv(Results.55002, "my/file/path/Results55002.csv", row.names = FALSE)write.csv(DesignHydro.55002, "my/file/path/DesHydro55002.csv", row.names = FALSE)8. Single Site Analysis

The quickest way to get results for a single sample are the GEV, GenLog, Gumbel, and GenPareto functions. For example, for a 75-year return period estimate for an AMAX sample called MyAMAX and using the generalised logistic distribution:

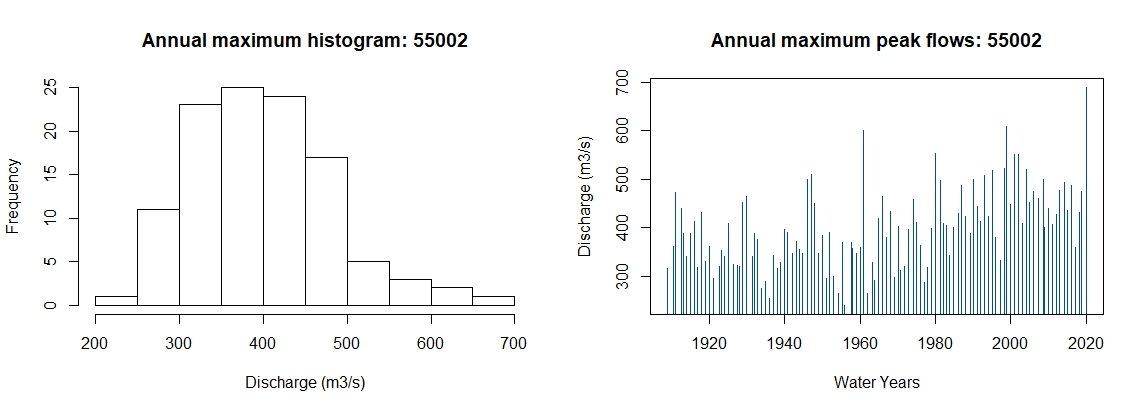

GenLogAM(MyAMAX, RP = 75)An annual maximum series can be obtained for sites suitable for pooling using the GetAM function. For all other AM series available from the NRFA peaks flows, the ImportAM function can be used. If you have a timeseries of flow with associated timestamp, the AMextract function can be used to extract the water year AMAX. For now, we will get and plot the AMAX for our site 55002 and plot.

AM.55002 <- GetAM(55002)AMplot(AM.55002)Figure 5: AMAX histogram and bar plot for site 55002

8.1 Annual maximums

For each of the GEV, GenLog, Gumble, and GenPareto distributions there are four functions; one that estimates return levels or return periods directly from the AM or POT series, one that does the same with an input of parameters, a third that provides the growth factor as a function of Lcv, Lskew and RP, and one for estimating parameters from a AM or POT series (using Lmoments or MLE).

With our AMAX, we can check the fit of the distributions using the GoTF. (Note that the GoTF function is not available as of UKFE version 0.3.5 but may return)

GoTF(AM.55002$Flow, dist = "GenLog")GoTF(AM.55002$Flow, dist = "GEV")GoTF(AM.55002$Flow, dist = "Gumbel")We can also compare them in the ERPlots or EVPlots. We’ll look at the Extreme Rank plot and the extreme value plots using the GEV: (Note that the ERPlot function is not available as of UKFE version 0.3.5 but will return)

ERPlot(AM.55002$Flow, dist = "GEV", Title = "Site 55002: GEV")EVPlot(AM.39001$Flow, dist = "GEV", Title = "Site 55002 GEV")Figure 6: Extreme rank plot with goodness of tail fit scores

It seems the GEV is the better fit for this site. As an example, we can estimate the 100-year flow. Then estimate the RP of the maximum flow observed - during the February 2020 flood (make sure to select the “Flow” column).

GEVAM(AM.55002$Flow, RP = 100)GEVAM(AM.55002$Flow, q = 689)Now estimate the parameters of the GEV from the data, using the default L-moments

GEVPars55002 <- GEVPars(AM.55002$Flow)GEVEst(GEVPars55002[1], GEVPars55002[3] , GEVPars55002[3], RP = 75)If we derive Lmoments for the site, we can then estimate the 50-year growth factor. The Lmoms function does this. We can also calculate LCV and LSKEW separately using the Lcv and LSkew functions. The growth factor can be calculated using the following code

GEVGF(lcv = Lcv(AM.55002$Flow), lskew= LSkew(AM.55002$Flow), RP = 50)Combining this latter result with the median, we can estimate the 50-year flow.

GEVGF(lcv = Lcv(AM.55002$Flow), lskew= LSkew(AM.55002$Flow), RP = 50) * median(AM.55002$Flow)8.2 Peaks over threshold

A POT series can also be obtained from a timeseries using the POTextract function. There is a daily sample of Thames at Kingston flow within the package that can be used for example purposes. The POTextract function can be used on a single vector or a data frame, with dates in the first column and the timeseries of interest in the second. As the Thames flow is in the third column and the date in the first, for the Thames data the following command can be used.

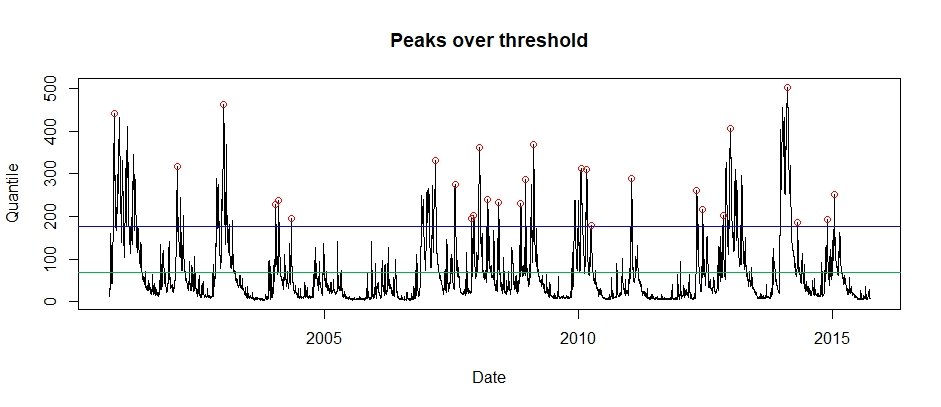

POT.Thames <- POTextract(ThamesPQ[,c(1,3)], thresh = 0.9)Figure 7: Peaks over threshold for the Thames at Kingson (2000 to 2015). The blue line is the threshold and the green is the mean flow line. Independent peaks are chosen above the threshold. If peaks are separated by a drop below the green line, they are considered independent.

The POT approach is the same except that we need to convert from the POT RP scale to the annual RP scale. To do so, the functions have a peaks per year (ppy) argument. The results of the POTextract function can provide the number of peaks per year (in this case 1.867). To estimate the associated 100-year flow the GenPareto function can be used as follows:

GenParetoPOT(POT.Thames$peak, ppy = 1.867, RP = 100)9. Uncertainty

The way to quantify uncertainty is provided here for three estimation methods, single site, ungauged pooling and gauged pooling. The single site and gauged pooling uncertainty is achieved by the method of bootstrapping. In the latter case the whole pooling group is bootstrapped, and the weighted estimates provided on every iteration. Uncertainty is provided as a default for the pooled estimates with “average” uncertainty methods.

9.1 Single Site

Extract an annual maximum sample with the following code.

AM.55002 <- GetAM(55002)The uncertainty for the 100-year flow can be estimated using the UncSS function is then:

UncSS(AM.55002$Flow, GEVAM, RP = 100)9.2 Pooling ungauged uncertainty

The Uncertainty() function can be used for the pooled estimates. The QMED, the number of donors, the RP and, the pooling group need to be used with the function. Furthermore, the factorial standard error needs to be provided for the ungauged case, using the QMEDfse argument (the default is 1.46). Estimate QMED first:

QMEDEst <- QMED(CDs.55002, Don2 = c(55007, 55016))Once we have the CDs object (CDs.55002) an ungauged pooling group for site 55002 can be created by:

PoolUG.55002 <- Pool(CDs.55002, exclude = 55002)Uncertainty(PoolUG.55002, qmed = QMEDEst)This provides the 68% and 95% intervals

9.3 Enhanced single site uncertainty

Gauged pooling, often called enhanced single site, is similar to the above but the gauged argument is set as TRUE and there is no need for the qmed and donors arguments. Previously we derived a gauged pooling group:

Pool.55002 <- Pool(CDs = CDs.55002, exclude = c(8010, 76017))The uncertainty for the 100-year flood is then quantified as:

Uncertainty(Pool.55002, gauged = TRUE, RP = 100)